Semi-automatic delineation of visible cadastral boundaries from high-resolution remote sensing data

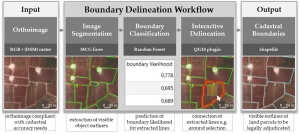

The software tool supports the delineation of visible boundaries by automatically retrieving information from high-resolution optical sensor data captured with UAV, aerial, or satellite platforms. The automatically extracted boundary features are then used to support the subsequent interactive delineation. The tool is designed for areas in which boundaries are demarcated by physical objects and are thus visible. It focuses on improving current indirect surveying by simplifying image-based cadastral mapping through image analysis and machine learning.

The source code can be found on GitHub and can be used free of charge. Our GitHub wiki provides guidance on how to use each step of the delineation tool, as well as test data. Our work is explained in this talk at FOSS4G 2019 in Bucharest.
